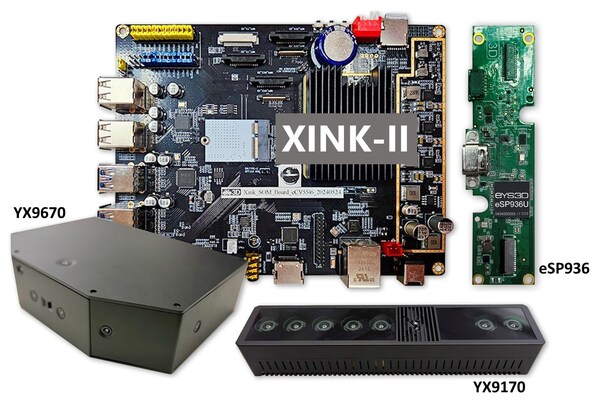

eYs3D Microelectronics Unveils Multi-Sensor Controller IC eSP936, YX9170 Spatial Perception Solution, and YX9670 Navigation Solution for Unmanned Vehicles

Integrated with eYs3D's Proprietary XINK-II Edge Spatial Computing Platform and the Sense and React™ Human-Machine Interaction Development Interface, These Innovations Provide Robust Support for Human-Machine Interfaces in Next-Generation AI Applications

With the rapid rise of AI image sensing and edge computing technologies, the market for robots and intelligent unmanned vehicles is growing explosively. eYs3D Microelectronics, a subsidiary of Etron Technology, has launched the eSP936, a new multi-sensor image controller IC. The eSP936 synchronously processes data from up to seven visual sensors, providing high image recognition accuracy. Paired with the Sense and React™ human-machine interaction developer interface, it enables intelligent control through human-machine interaction, making it a key driver for deploying smart applications.

The eSP936 can be integrated with multi-modal vision-language models (VLMs), combining multiple visual sensors with real-time AI edge computing. It suits unmanned vehicles such as automated guided vehicles, autonomous mobile robots, and drones. The eSP936 processes multiple 2D images at high speed and generates 3D depth maps, improving the precision of environmental recognition, while embedded AI chips enable dynamic navigation in complex environments. Industrial and service robots can likewise achieve more precise perception in complex scenarios, combining real-time computing with automated operation for high efficiency.

YX9170 Spatial Awareness Solution

At CES 2025, eYs3D unveils the YX9170 spatial awareness solution. Powered by the eSP936 multi-sensor image controller chip and the XINK-II edge spatial computing platform, it combines advanced multi-sensor fusion with AI-driven technologies to deliver breakthroughs in spatial perception and recognition. It is designed for industrial robots, service robots, and autonomous vehicles, offering a long perception range and high recognition accuracy.

The YX9170 features dual-depth sensors, synchronises four RGB cameras, and supports multiple stereo lens baseline integrations. Embedded AI algorithms enable real-time multi-object recognition, reducing computational load and latency by 30%.
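To illustrate why support for multiple stereo baselines matters, the short sketch below applies the standard pinhole stereo relation, depth = focal length × baseline / disparity. It is not eYs3D code: the function name, the focal length, and the baseline values are hypothetical and chosen only for illustration.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Standard pinhole stereo relation Z = f * B / d (depth in metres)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical values for illustration only, not eYs3D specifications.
FOCAL_PX = 800.0            # focal length expressed in pixels
BASELINES_M = (0.05, 0.15)  # a short and a long stereo baseline, in metres

for baseline in BASELINES_M:
    z = depth_from_disparity(10.0, FOCAL_PX, baseline)
    print(f"baseline {baseline:.2f} m -> depth {z:.1f} m at 10 px disparity")
```

For the same measured disparity, the longer baseline corresponds to a more distant point, which is why a system that combines short and long baselines can cover both near and far ranges.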

YX9670 Navigation Solution

eYs3D also introduces the YX9670 navigation solution for autonomous vehicles. This system integrates dual-depth sensors, synchronises four RGB cameras for a 278-degree panoramic view, and includes a thermal imaging sensor and an attitude and heading reference system (AHRS) for vessel coordination. With real-time AI-driven analysis of navigation direction and multi-target tracking, it delivers high recognition efficiency even in challenging environments. Already deployed in autonomous vessel navigation, the YX9670 supports logistics vessels, environmental monitoring ships, and specialised unmanned marine applications.

XINK-II Edge Spatial Computing Platform and Sense and React™ Toolkit

eYs3D debuts its XINK-II Edge Spatial Computing Platform at CES 2025. Equipped with an AI chip featuring ARM Cortex-A and Cortex-M CPU cores, the platform supports AHRS, thermal imaging, and radar sensors, and incorporates convolutional neural network (CNN) technology to enhance edge AI device capabilities. It supports popular AI frameworks such as TensorFlow and PyTorch, giving developers flexibility.

The Sense and React™ Toolkit combines large language models (LLMs) with CNN sensing technology, enabling environmental awareness and conversational human-machine interaction. This marks a new era in interface technology, enhancing intelligent user experiences through natural language prompts and action commands.