Databricks and NVIDIA Deepen Collaboration to Accelerate Data and AI Workloads with the Data Intelligence Platform

Databricks, the Data and AI company, today announced an expanded collaboration with NVIDIA, including a commitment to deeper technical integrations, during NVIDIA’s flagship GTC 2024 conference. Together, the two companies will optimise data and AI workloads on the Databricks Data Intelligence Platform. The collaboration builds on NVIDIA’s recent participation in Databricks’ Series I funding round.

“We’re thrilled about the evolution of our partnership with NVIDIA that will drive more value for customers through the advancement of Databricks workloads with NVIDIA accelerated computing and software,” said Ali Ghodsi, co-founder and CEO of Databricks.

“From analytics use cases through AI, NVIDIA has already powered our foundational model initiatives, and with our mutual work on query acceleration, we’ll be able to demonstrate value for more enterprises.”

“Every company’s proprietary data is a crucial asset for creating intelligence in the era of AI,” said Jensen Huang, founder and CEO of NVIDIA. “By accelerating data processing, NVIDIA and Databricks can supercharge AI development and deployment for enterprises seeking greater insights and better outcomes with more efficiency.”

GPU acceleration for end-to-end generative AI solutions

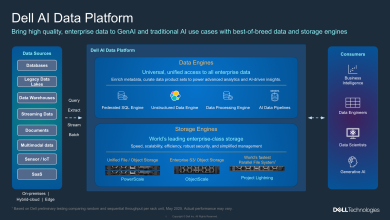

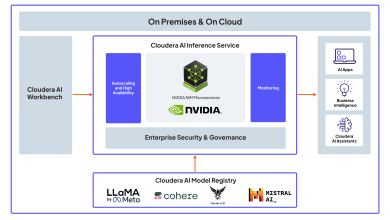

Organisations are rapidly adopting Databricks’ Data Intelligence Platform to build and customise generative AI solutions trained on their own data and tailored to their business and domain. Databricks Mosaic AI and NVIDIA are collaborating on model training and inference to advance how generative AI models are built and deployed on Databricks’ end-to-end platform. Databricks offers a comprehensive set of tools for building, testing and deploying generative AI solutions with uncompromising control and governance over both data and models.

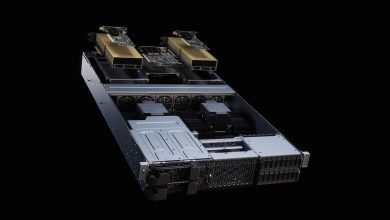

For generative AI model training, Databricks Mosaic AI relies on NVIDIA H100 Tensor Core GPUs, which are optimised for developing LLMs. This allows Mosaic AI to harness NVIDIA accelerated computing and offer customers an efficient, scalable platform for customising LLMs.

For model deployment, Databricks uses NVIDIA accelerated computing and software throughout the stack. A key component of Databricks’ Mosaic AI Model Serving is NVIDIA TensorRT-LLM software, which delivers state-of-the-art inference performance while keeping serving cost-effective and scalable. Mosaic AI was a TensorRT-LLM launch partner and works in close technical collaboration with the NVIDIA team.
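From a client’s point of view, the TensorRT-LLM acceleration happens on the serving side; applications simply send requests to a Model Serving endpoint. The following is a minimal client-side sketch using only the Python standard library. It assumes a chat-style endpoint that accepts an OpenAI-compatible `messages` payload at the `/serving-endpoints/{name}/invocations` REST path. The workspace URL, endpoint name, token and the helper function names are hypothetical placeholders, not part of the announcement.

```python
import json
import urllib.request


def build_invocation_request(workspace_url: str, endpoint_name: str, token: str,
                             prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) a POST request to a Model Serving endpoint.

    Assumption: the endpoint serves a chat-style LLM that accepts an
    OpenAI-compatible "messages" payload. All arguments are placeholders.
    """
    url = f"{workspace_url.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # personal access token (placeholder)
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query_endpoint(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON response (needs network access)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_invocation_request(
        "https://example.cloud.databricks.com",  # hypothetical workspace URL
        "my-llm-endpoint",                        # hypothetical endpoint name
        "dapi-PLACEHOLDER",                       # placeholder token
        "Summarise last quarter's sales trends.",
    )
    print(req.get_method(), req.full_url)
```

Separating request construction from sending keeps the payload easy to inspect and test offline; `query_endpoint(req)` would only be called against a real workspace with a valid token.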

Photon with NVIDIA accelerated computing speeds query performance

Databricks plans to build native support for NVIDIA accelerated computing into its next-generation vectorised query engine, Photon, to deliver improved speed and efficiency for customers’ data warehousing and analytics workloads. Photon powers Databricks SQL, Databricks’ serverless data warehouse, with industry-leading price-performance and total cost of ownership (TCO). This work builds on the growing number of Databricks customers who already use GPUs to process queries on their data.

Databricks for machine learning and deep learning

Machine learning (ML) and deep learning have long been critical workloads on Databricks. Databricks Machine Learning delivers pre-built deep learning infrastructure that includes NVIDIA GPUs, and the Databricks Runtime for ML comes with pre-configured GPU support, including drivers and libraries. With these tools, users can get started quickly on the right NVIDIA infrastructure and keep the environment consistent across users. Databricks supports NVIDIA Tensor Core GPUs on all three major clouds, enabling high-performance single-node and distributed training for ML workloads.

The companies plan to build on the momentum of the Data Intelligence Platform, enabling more organisations to create the next generation of data and AI applications with quality, speed and agility.
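On a GPU cluster running Databricks Runtime for ML, drivers and libraries should already be in place, and a framework call such as PyTorch’s `torch.cuda.is_available()` is the usual way to confirm a GPU is usable. As a framework-free alternative, the sketch below is a generic, stdlib-only sanity check; it is not a Databricks API. It assumes that `nvidia-smi` on the `PATH` signals an installed NVIDIA driver and interprets the standard `CUDA_VISIBLE_DEVICES` variable. The function names are hypothetical.

```python
import os
import shutil
from typing import Optional


def parse_visible_devices(value: Optional[str]) -> Optional[list[str]]:
    """Interpret a CUDA_VISIBLE_DEVICES value.

    None (variable unset) means CUDA does not restrict visibility, so all
    GPUs are visible; an empty string hides every GPU.
    """
    if value is None:
        return None
    return [item.strip() for item in value.split(",") if item.strip()]


def describe_gpu_environment(env: Optional[dict] = None) -> dict:
    """Summarise whether NVIDIA driver tooling and GPUs appear to be available.

    Hypothetical helper: a heuristic check, not a substitute for asking the
    ML framework itself whether a GPU can be used.
    """
    env = os.environ if env is None else env
    visible = parse_visible_devices(env.get("CUDA_VISIBLE_DEVICES"))
    return {
        # nvidia-smi ships with the NVIDIA driver, so finding it on PATH is a
        # cheap (but not conclusive) signal that drivers are installed.
        "nvidia_smi_on_path": shutil.which("nvidia-smi") is not None,
        "visible_devices": visible,
        "gpus_hidden": visible == [],
    }


if __name__ == "__main__":
    print(describe_gpu_environment())
```

Passing an explicit `env` dictionary keeps the check deterministic for testing; calling it with no arguments inspects the current process environment.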