The SAS 2023 global roadshow stopped in Singapore, with executives from SAS presenting to an audience that had travelled from across Southeast Asia to hear how the pioneer of computing-based statistics—and, lately, a leader in Artificial Intelligence (AI)-based analytics—is developing its strategy and offerings.

Amir Sohrabi, Regional Vice President and Head of Digital Transformation, Asia and Korea, at SAS, kicked off the proceedings and warned us that the days of linear thinking, where we make progress one step at a time, are long gone. According to him, companies that progress at that pace will become digital dinosaurs. Instead, progress (and thinking) in today’s world needs to be exponential, where 2 ideas become 4, 4 become 16, and 16 become 256; you get the picture. AI-driven analytics is the tool that opens this path.

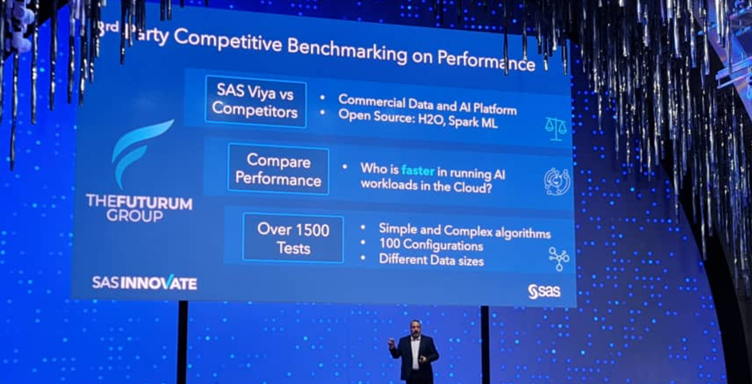

Later, Amir would tell us that the one thing he wanted everyone to take away was that SAS runs cloud analytics 30 times faster than open source or other commercial offerings. In fact, when it comes to commercial offerings, SAS is 56 times faster.

Amir and the rest of the SAS team weren’t asking us to take their word for it; they have the figures to back up their claims. These figures come from The Futurum Group, which conducted 1,500 tests over 100 cloud configurations to arrive at these numbers.

The Three Pillars of AI

The real “meat” of the keynote session started with Bryan Harris, Executive Vice President and Chief Technology Officer at SAS, who took time to highlight the recent developments in SAS solutions and strategy to underline “who” the company is today and what drives them to keep innovating.

Among these developments are things like accelerating to value and outpacing the competition of tomorrow. But the phrase that stuck with me was when Bryan stated SAS is about helping their customers make better and faster business decisions than their competition. I guess any software company that can deliver that will be loved by their customers—and, based on 50 years of success, customers do seem to continue to love SAS.

Bryan crammed a lot of valuable information into a very short amount of time. Critically, he broke down what I will term “the three pillars of AI,” which SAS themselves believe are critical for any AI Analytics platform.

These pillars are Productivity, Performance, and Trust.

For SAS, Productivity is about automating and “removing friction” from every task in the analytics flow. Performance is about optimising the platform to be fast and efficient, and with SAS, it is clear they are maniacal about cloud-optimising their platform. Trust, in the view of this lay observer, is arguably the most important aspect. In an era when we increasingly rely on algorithms, code, and AI to make decisions for us, it is vital to have results that are traceable, transparent, and accurate without question. Bryan assured us that as SAS develops their platform, these three tenets will remain at the heart of what they do.

Bryan shared with us that productivity is a big issue, with most companies spending 80% of their analytics effort and resources cleaning and preparing data, and only 20% actually analysing it (a frightening statistic if you think about it). He told us that SAS are committed to helping people reverse that statistic, which was a good segue into the next presenter, Shadi Shahin, VP of Product Strategy at SAS, who focused on why productivity in analytics is so hard to achieve.

Addressing the Challenges of Productivity in Analytics

Clearly, if SAS intend to reverse the 80/20 split and have more companies spending 80% of their time actually analysing data, driving far greater productivity is non-negotiable. Shadi ran through the challenges and outlined what SAS is doing to counter them.

The starting point, according to Shadi, is to ensure that the case for transformation is understood and agreed upon at the highest levels within your organisation.

Once you have that, organisations need to harness the experience of their seasoned staff and build it into systems and processes. Doing so enables less experienced staff to achieve the outcomes you would expect from more experienced people.

Shadi also explained that you need to remove friction from every “handover point” in the analytics workflow. Examples he gave include bringing in a new coder who works better in a different coding language. Rather than retraining that coder in the language you currently use, have a system that can use any language and let them be productive from day one using the knowledge they joined you with.

Another example was building AI into the data cleaning process, so even if you are short on data scientists/wranglers, you can have less experienced people get prompted by AI on what data should be cleaned and then automate the execution of the cleaning itself.

Delving Into the 30x Faster Mantra

The subject of performance was left for Udo Sglavo, Vice President of Advanced Analytics at SAS, to cover. Given Amir’s earlier proclamation that the one thing he wanted the audience to remember was the 30x faster mantra, there was no pressure on Udo then!

However, he was up to the challenge and did a great job of explaining the amount of engineering and development work that has gone into truly cloud-optimising the whole SAS AI analytics offering. The performance numbers are no lucky coincidence and, in fact, are compelling.

The 30x performance number is really about how SAS performs against open-source analytics engines, and as we know, open source is not for everyone. When compared to other commercial offerings, the performance advantage is even more compelling—with 49x faster performance on general analytics workloads and 326x faster performance on “complex” analytics models.

SAS is cloud agnostic, but what Udo pointed out is that by running faster in every cloud, analytics with SAS will rack up much lower cloud bills than the same work would with competitors. Later, I had the chance to have a one-on-one chat with Udo, and he confirmed that SAS is already thinking about offering functionality that simulates a complex model and predicts the performance and cost implications for each public cloud, allowing clients to select which cloud to run large algorithms on based on cost and speed.
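
To make the link between speed and cloud bills concrete, here is a minimal sketch of the arithmetic, assuming simple pay-per-hour billing where cost is the hourly rate multiplied by runtime. Every name and number below is hypothetical and chosen only for illustration; none of it comes from SAS or The Futurum Group, and it is not how any SAS feature is implemented.

```python
from dataclasses import dataclass


@dataclass
class CloudOption:
    name: str             # hypothetical label for an engine or cloud
    hourly_rate: float    # assumed price per compute hour, in dollars
    runtime_hours: float  # assumed time needed to finish the job


def job_cost(option: CloudOption) -> float:
    """Cost of one run under pay-per-hour billing: rate times hours."""
    return option.hourly_rate * option.runtime_hours


def cheapest(options: list[CloudOption]) -> CloudOption:
    """Pick the lowest-cost option, breaking ties by shorter runtime."""
    return min(options, key=lambda o: (job_cost(o), o.runtime_hours))


# Illustrative only: a 30-hour job, and the same job on an engine
# that is 30x faster at the same hourly rate.
baseline = CloudOption("baseline-engine", hourly_rate=4.0, runtime_hours=30.0)
faster = CloudOption("faster-engine", hourly_rate=4.0, runtime_hours=30.0 / 30)

print(job_cost(baseline))  # 120.0
print(job_cost(faster))    # 4.0

# Illustrative only: the same job with made-up rates and runtimes per cloud.
clouds = [
    CloudOption("cloud-a", hourly_rate=4.0, runtime_hours=1.0),
    CloudOption("cloud-b", hourly_rate=3.0, runtime_hours=1.5),
    CloudOption("cloud-c", hourly_rate=5.0, runtime_hours=0.75),
]
print(cheapest(clouds).name)  # cloud-c
```

Under these assumptions the cheapest cloud is not the one with the lowest hourly rate: the option that finishes soonest can still win on total cost, which is the kind of trade-off Udo described.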

The concept of Trust was covered by Eric Yu, Principal Solutions Architect within the Data Ethics Practice at SAS, who pointed out that while the world is getting excited about the possibilities of AI (especially generative AI and LLMs), it actually has the potential to erode trust in your analytics platform.

Eric noted that the more you automate or pass decision-making to AI, the more potential there is for it to come back with inaccurate answers. SAS addresses this head-on by using only the appropriate type of AI for the task at hand and, even more importantly, by providing transparent traceability back into the platform. A human is able to pull logs and understand every step that led to a data point, prediction, or answer being served up. This level of transparency is critical to being able to implicitly trust the outcomes your analytics platforms deliver.

Eric summed it up with the term “Human Centricity”, a concept that he assured us is baked into SAS’s solutions.

The last words (from my perspective) from the keynotes at SAS Innovate Singapore 2023 go to Bryan when he told us, “Information overload is exceeding human capacity.” It is a frighteningly true statement, and the only way to make business sense of that information overload is to embrace AI analytics.

Guess what? That’s where you might want to start a conversation with SAS…